
By Robert G. Jordan, NorthStar Intelligence

In the AI world, it's not the size of the model in the fight, but the size of the fight in the model...

The landscape of AI large language models (LLMs) is undergoing a transformative shift, challenging our traditional beliefs about what makes a model effective.

Giants in the field like GPT-4 and PaLM 2 are leading this change, showing us that when it comes to language models, compactness may indeed be a virtue.

"Sooner or later, scaling of GPU chips will fail to keep up with increases in model size... So, continuing to make models bigger and bigger is not a viable option."

I've been down this road before, the road of grandiose promises and larger-than-life expectations. But as I dig deeper, I realize that the landscape is changing. The open-domain models that once promised a democratization of AI capabilities are falling short. They're like the bulky desktop computers of the '90s: impressive in their time but woefully inadequate now.

Arvind Jain, the CEO of Glean, a provider of an AI-assisted enterprise search engine, echoes this sentiment. "The reality right now is the models that are in open domain are not very powerful. Our own experimentation has shown that the quality you get from GPT-4 or PaLM 2 far exceeds that of open-domain models."

So, why is smaller better?

The answer lies in the intricate balance between power and adaptability. Proprietary models like GPT-4 and PaLM 2 have managed to condense a wealth of information and capabilities into more compact forms. They're the sleek, modern laptops to the open-domain models' cumbersome desktops. They're not just powerful; they're versatile, adaptable, and far more efficient.

But let's not kid ourselves: building a proprietary model is no walk in the park. It's a monumental task, one that requires a deep understanding of not just technology but also the nuances of language and human interaction. Jain himself admits that he hasn't come across a single client who has successfully built their own model. The challenges are manifold, from data collection and training to fine-tuning and implementation.

Yet the rewards are equally significant. A well-crafted proprietary model can revolutionize how we interact with technology, opening up new avenues for productivity and innovation. The question then arises: Is it worth the effort to build and train your own models?

According to Jain, the answer, at least for now, is a resounding no. The proprietary models have set the bar so high that anything less feels like a compromise. For general-purpose applications, it's not the right strategy to go down the rabbit hole of creating your own models. The time, resources, and expertise required far outweigh the potential benefits, especially when superior options are readily available.

This brings me to my final point: the broader implications of this shift towards smaller, more powerful models. We're not just talking about a technological advancement; we're talking about a paradigm shift.

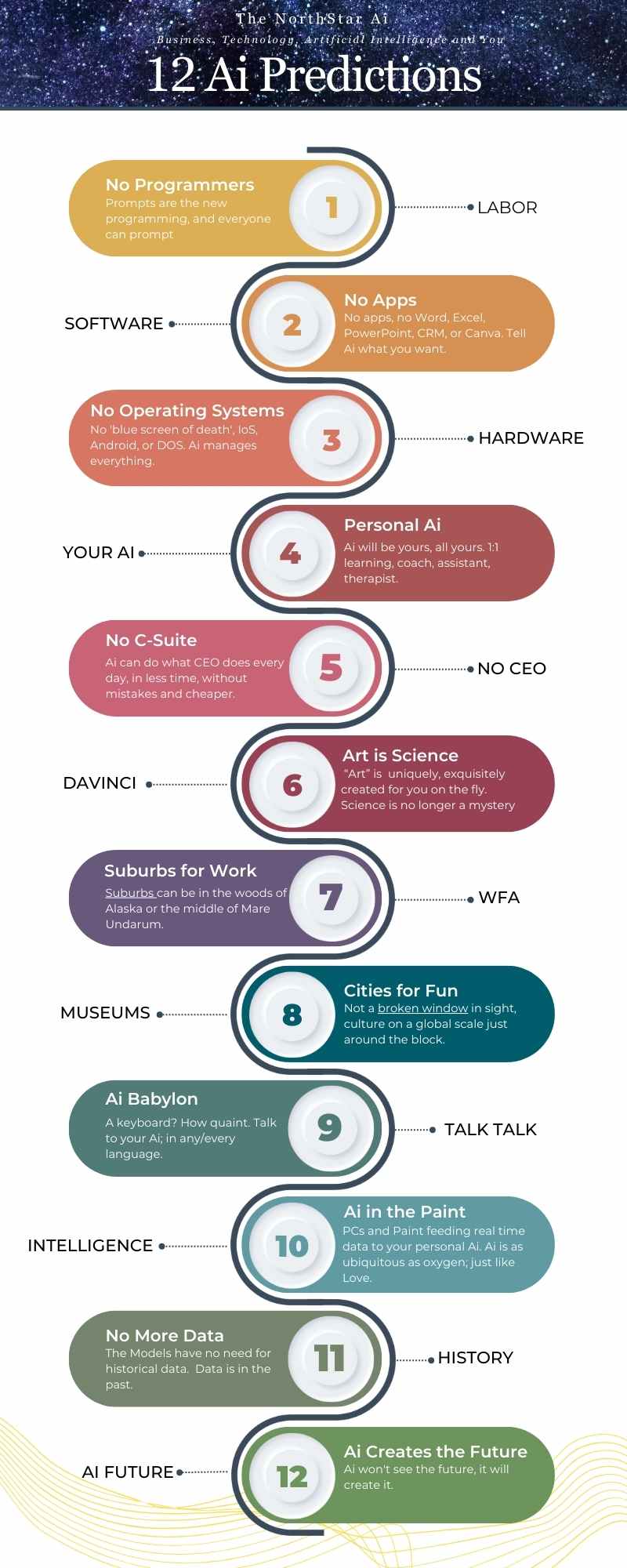

The ripple effects of this change will be felt far and wide, from how we conduct business to how we interact with each other and the world around us. The future of work is being reshaped before our very eyes, and technology is at the helm, steering us into uncharted waters.

Summary (150 words): The article from Computerworld delves into the world of AI language models, emphasizing the need for them to become more compact. Currently, open-domain models are not as potent as their proprietary counterparts. Building proprietary LLMs is a daunting endeavor. Jain, a notable figure in the article, mentions that he hasn't encountered a single client who has successfully constructed their own model, even though many continue to experiment with the technology. He states, "The reality right now is the models that are in open domain are not very powerful. Our own experimentation has shown that the quality you get from GPT-4 or PaLM 2 far exceeds that of open-domain models." Jain suggests that for general-purpose applications, constructing and training one's own models isn't the right approach at present.

Tweet: "Open-domain AI language models have room for improvement. Proprietary models like GPT-4 & PaLM 2 lead the way in quality. 🤖💡 #AILanguageModels #TechInsights"

SEO-based Title: "Why Compact AI Language Models are the Future: A Deep Dive"

SEO Post Description: "Explore the reasons behind the push for smaller AI language models and why proprietary models like GPT-4 and PaLM 2 are setting the benchmark."

Introduction Paragraph for a LinkedIn Post: "In the ever-evolving realm of AI, size does matter. Dive into this insightful piece from Computerworld that sheds light on why the tech world is advocating for more compact AI language models and the undeniable prowess of proprietary models like GPT-4 and PaLM 2."

Keyword List: AI, language models, proprietary LLMs, open-domain models, GPT-4, PaLM 2, technology, quality, experimentation

Description of an Ideal Image: A split image showcasing a large, bulky traditional computer on one side and a sleek, modern, compact laptop on the other, symbolizing the transition from bulky to compact models.

Search Question: "Why are compact AI language models considered better?"

Title: "The Compact Revolution: AI Language Models in Focus"

Funny Tagline: "Size matters, but in the world of AI, smaller is smarter!"

Suggested Song that Matches the Theme: "Smaller" by John Mayer

